1. Accuracy

Accuracy describes the closeness between an estimated result and the (unknown) true value.

The target population for the key stage 2 data collection is all pupils who are at the end of key stage 2 in England. However, key stage 2 assessments are only mandatory for state-funded schools. In 2025, 162 independent schools (out of approximately 1300 independent schools with the appropriate age range) took part in the assessments. Therefore, in the provisional and revised publications, we provide a national figure for state-funded schools only and a national figure for all schools that participated in the assessments. Pupils who are home-schooled are not required to take part in the assessments.

The provisional KS2 data is based on test and teacher assessment data provided to the department by STA in July. It contains test results for all pupils who took the KS2 tests (although some of these may subsequently be updated following a successful marking review or the completion of a maladministration investigation).

In the provisional 2025 data, no test results were suppressed due to maladministration investigations, unchanged from 2023. 13 results had been annulled due to confirmed maladministration, unchanged since 2024. 1 test result was annulled or removed due to pupil cheating, compared to 2 in 2024.

In the revised 2025 data, 16 test results were annulled and no results were suppressed pending maladministration investigations. 1 test result was annulled due to pupils cheating. Teacher assessments had been submitted for 99.6% of pupils. They can therefore be considered representative of all schools that took part in the assessments.

The department has become aware that a small number of schools are missing KS2 writing teacher assessment data, as a result of data input issues, in the KS2 revised school level data published on the Compare School and College Performance (CSCP) service in December 2025. This can also affect reading, writing and maths (combined) attainment. The correct data will be included in the KS2 final data published on CSCP in April. ASP will be amended to include the missing KS2 writing data in March.

1.1 Guidance, monitoring & marking

Clear guidance is provided to schools regarding the administration of the key stage 2 tests, including instructions for keeping the test materials secure prior to the tests and storage of completed scripts until they are collected for marking.

Local Authorities monitor the administration of the tests in the schools they are responsible for and make unannounced visits to at least 10 per cent of those schools, before, during and after the test period. STA representatives may also make monitoring visits.

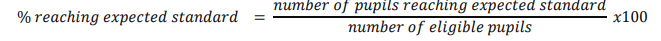

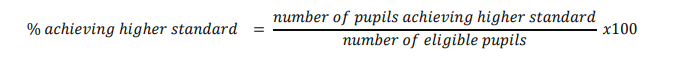

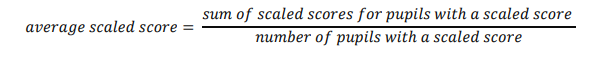

The tests are externally marked by STA to ensure that marking is consistent between schools. Once the tests have been marked the expected standard is set. Pupils who achieve exactly the expected standard will have a scaled score of 100. After the expected standard has been set, a statistical technique called ‘scaling’ is used to transform the raw score into a scaled score. The scaled score runs from 80 to 120.
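As a minimal sketch of how a scaled score can be read, the snippet below assumes that a scaled score of 100 or more means the pupil met the expected standard. The function name is hypothetical, and the raw-to-scaled conversion tables themselves are not reproduced here.

```python
SCALED_MIN = 80          # lowest scaled score, as stated above
SCALED_MAX = 120         # highest scaled score, as stated above
EXPECTED_STANDARD = 100  # scaled score at exactly the expected standard


def met_expected_standard(scaled_score: int) -> bool:
    """Return True if the scaled score is at or above the expected standard.

    Assumption: 'at or above 100' is treated as meeting the standard.
    """
    if not SCALED_MIN <= scaled_score <= SCALED_MAX:
        raise ValueError(f"scaled score {scaled_score} is outside 80 to 120")
    return scaled_score >= EXPECTED_STANDARD
```

Rejecting out-of-range values, rather than clamping them, makes data errors visible instead of silently converting them into valid-looking scores.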

There is guidance to explain how key stage 2 teacher assessments in English reading, English writing, mathematics and science should be produced and submitted to STA. Writing teacher assessment is subject to moderation by LAs because it is used in the headline attainment measures.

The key stage 2 teacher assessment collection remains open for three months after the initial collection deadline so that revised data can be submitted to correct any errors identified by schools or LAs after submission of data.

STA operates a helpline to assist LAs and schools that are having difficulty submitting data. The helpline will also contact any LAs and schools who they believe may be having problems submitting their data. The STA monitors the level of returns and the helpdesk contacts LAs and schools with outstanding data as the submission deadline approaches.

1.2 Dry run

The production process is subject to a ‘dry run’ during the summer. This involves producing a dummy dataset from the previous year’s dataset which conforms to how the current year’s data will be supplied. This dummy dataset is used to test the data matching and processing conducted by the Department for Education as part of the statistics production process. The dummy dataset is also used to test the department’s checking processes. This allows potential problems to be resolved prior to the receipt of the live data.

1.3 Checks applied by STA

Test scripts

Every result is passed through a result validation engine to identify errors. Validation is data driven using the values as listed in the national curriculum outcome codes set out in section 4.1. In addition to basic field validation of permitted values, more complex results have specific cross-field validation to ensure multiple field consistency.

Checks are also carried out to make sure that where a script exists in one component of a test, the other components of the test are consistent (a script also exists or an absent code is present). For example, a child cannot have sat one component of the test and be recorded as ‘B’ (below the standard) in another component. In these cases, the inconsistent codes will be changed to absent and an absent overall code will be applied to that subject.
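The component consistency check described above could be sketched as follows. This is an illustration only: the `SCRIPT` marker, the use of `"A"` as the absent code and the function name are assumptions, not STA's actual implementation.

```python
from typing import Dict, Optional, Tuple

ABSENT = "A"       # assumed outcome code for 'absent'
SCRIPT = "SCRIPT"  # hypothetical marker meaning a marked script exists


def reconcile_components(
    components: Dict[str, str],
) -> Tuple[Dict[str, str], Optional[str]]:
    """Return corrected component codes and an overall override, if any.

    If a script exists for one component, every other component must
    either have a script or be coded absent. Any other code (for
    example 'B') is inconsistent: it is changed to absent and an
    absent overall code is applied to the subject.
    """
    has_script = any(code == SCRIPT for code in components.values())
    if not has_script:
        return dict(components), None

    inconsistent = [
        name for name, code in components.items()
        if code not in (SCRIPT, ABSENT)
    ]
    if not inconsistent:
        return dict(components), None

    corrected = {
        name: (ABSENT if name in inconsistent else code)
        for name, code in components.items()
    }
    return corrected, ABSENT
```

For example, `reconcile_components({"paper1": "SCRIPT", "paper2": "B"})` returns the corrected codes `{"paper1": "SCRIPT", "paper2": "A"}` together with an overall code of `"A"`.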

Teacher assessment

Teacher assessment data is collected from schools (and LAs that have chosen to submit on behalf of their schools) via the Primary Assessment Gateway (a system used by STA to manage and collect information from schools about KS2 tests and teacher assessment). Schools and LAs can upload their data using a CTF extract from their MIS provider or input the data into a spreadsheet template, which includes the details of the children we are expecting data for.

The MIS will include a number of validation rules which check that the data entered is valid and alert the school to correct the data if not. The Primary Assessment Gateway will validate this data on upload and provide schools and LAs with warning and error messages where appropriate to allow them to correct any identified issues.

Once the data has been validated, it is automatically matched to children using a matching algorithm. A single match (and no more) must exist in order for teacher assessment results to be linked to a child. If no match exists then the record is manually reviewed by STA.
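The single-match rule can be illustrated with a short sketch. The matching keys below are hypothetical, since the fields used by the real algorithm are not described here. Sending multiple matches to manual review is also an assumption; the text only states what happens when no match exists.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical matching keys, chosen for illustration only.
MATCH_KEYS = ("upn", "surname", "date_of_birth")


def find_matches(record: Dict[str, str], pupils: List[Dict[str, str]]) -> List[str]:
    """Return the ids of all pupils whose key fields equal the record's."""
    return [
        pupil["pupil_id"]
        for pupil in pupils
        if all(pupil.get(key) == record.get(key) for key in MATCH_KEYS)
    ]


def link_assessment(
    record: Dict[str, str], pupils: List[Dict[str, str]]
) -> Tuple[str, Optional[str]]:
    """Link a teacher assessment only when exactly one pupil matches."""
    matches = find_matches(record, pupils)
    if len(matches) == 1:
        return ("linked", matches[0])
    # No match goes to manual review; multiple matches are treated
    # the same way here as an assumption.
    return ("manual_review", None)
```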

Occasionally a school may contact STA because it has provided teacher assessment data for a child who was not recorded at that school for the tests. The teacher assessment will only be included if the school provides a valid reason why the child did not sit the test (usually because they were below the standard or absent on the day of the test).

1.4 Checks applied by DfE

At every stage in the data cycle, the department checks selected calculations used in the production of the figures. The department carries out checks on the data to ensure that the files comply with the specified format and contain the correct information. Selected indicators at school level, LA and national level are re-derived by an independent team to ensure the data production pipeline is programmed correctly.

All figures in the underlying data are produced by one person and quality checked by another. Key tables are dual run by two people independently. Any discrepancies in the data produced are discussed, and more experienced staff are involved as required to agree the correct figures. Additional checks are also carried out on the data produced.
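A dual run comparison can be sketched as below, assuming each person's output is held as a mapping from a table cell label to a figure. The function name and the data layout are illustrative assumptions, not the department's actual tooling.

```python
from typing import Dict, Optional, Tuple


def find_discrepancies(
    run_a: Dict[str, float], run_b: Dict[str, float]
) -> Dict[str, Tuple[Optional[float], Optional[float]]]:
    """Return every cell where the two independent runs disagree.

    A cell missing from one run is reported with None for that run.
    """
    cells = sorted(set(run_a) | set(run_b))
    return {
        cell: (run_a.get(cell), run_b.get(cell))
        for cell in cells
        if run_a.get(cell) != run_b.get(cell)
    }
```

An empty result means the two runs agree on every cell. Anything else is a list of figures to discuss before publication.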

1.5 Dual registered pupils

Occasionally, more than one school may register the same pupil for the test (for example, if pupils change school or are dually registered). The vast majority of these cases are resolved when test scripts and attendance registers are received. However, in a small number of cases this is not possible, normally where a pupil is working below the level of the tests. In these cases, STA contact the schools involved to establish which school the pupil was attending during test week and where the results should be assigned.

1.6 The review process

After marking, schools can view scanned images of their pupils' test scripts and request a review of the marking if they believe that there is a discrepancy between how questions have been marked and the published mark scheme. Schools are encouraged to apply for a review only if they think it would result in a change leading to a pupil reaching or not reaching the expected standard, or a change of 3 or more marks to the raw score. Outcomes of reviews are not reflected in the provisional data but are included in the revised data. Annex A provides detailed information about the reviews conducted from 2019.

1.7 The performance tables checking exercise

As a further check of the accuracy of the underlying data, the key stage 2 data is also collated into school level information and shown to schools, together with the underlying pupil data during the performance tables checking exercise. Schools are required to check the data and notify the department of any pupils that are included in their school in error, or of any missing pupils. Schools can also notify us of any other errors in the data such as errors in matching prior attainment results. Any changes requested are validated to ensure that they comply with the rules before being accepted. Schools are also able to apply for pupils to be discounted from their figures, if they have recently arrived from overseas and their first language is not English. We allow the removal of these pupils from the regional, LA, LAD, parliamentary constituency and school figures. However, we continue to include these pupils in the national figures so that they reflect the attainment of all pupils.

1.8 Maladministration

STA may investigate any matter brought to its attention where there is doubt over the accuracy or correctness of a child’s results in the tests. Results for schools under investigation may be withheld until the investigation is complete. Each year, a few schools have their results amended or annulled because they do not comply with the statutory arrangements. Maladministration can lead to changes to, or annulment of, results. It can apply to whole cohorts, groups of children, individual children or individual tests.

1.9 Disclosure control

The Code of Practice for Official Statistics requires us to take reasonable steps to ensure that our published or disseminated statistics protect confidentiality.

Suppression is applied, in both the provisional and revised publications, to the corresponding values for any local authority with only one school and to any figure based on fewer than six eligible pupils. When suppression is applied, regional eligible pupil figures are rounded to the nearest 10 so that it is not possible to derive figures for these LAs by summing the figures for the other LAs in the region.
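The suppression and rounding rules can be sketched as follows. The field names and function names are hypothetical. Rounding a value ending in 5 upwards is also an assumption, as the text does not specify how ties are handled.

```python
from typing import Dict, List

MIN_ELIGIBLE_PUPILS = 6  # figures based on fewer pupils are suppressed


def is_suppressed(la: Dict[str, int]) -> bool:
    """Suppress an LA with only one school or fewer than six eligible pupils."""
    return la["schools"] == 1 or la["eligible"] < MIN_ELIGIBLE_PUPILS


def round_to_nearest_10(value: int) -> int:
    """Round to the nearest 10, with ties rounded up (an assumption)."""
    return (value + 5) // 10 * 10


def regional_eligible_pupils(las: List[Dict[str, int]]) -> int:
    """Return the regional total, rounded if any LA in the region is suppressed.

    Rounding prevents a suppressed LA's figure being recovered by
    subtracting the other LAs' figures from the regional total.
    """
    total = sum(la["eligible"] for la in las)
    if any(is_suppressed(la) for la in las):
        return round_to_nearest_10(total)
    return total
```

Integer arithmetic is used in place of Python's built-in `round`, which rounds ties to the nearest even number and would give 1240 for both 1235 and 1245.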

1.10 Other

Following publication of the performance tables, some schools notify us of further changes required in the data. These changes are validated in the same way as those that are received during the checking exercise and final data is produced.

2. Reliability

Reliability is the extent to which an estimate changes over different versions of the same data.

The key stage 2 data is subject to greater change between provisional and revised data as the revised data contains:

- outcomes of the appeals process where schools ask for reviews for one or more of their pupils in the belief that a clerical error has been made or the mark scheme has not been correctly applied;

- changes resulting from the completion of maladministration investigations;

- changes resulting from requests from schools to remove pupils who have recently arrived from overseas;

- any additional or revised teacher assessments.

However, the national figures typically show no change between interim, provisional, revised and final data, although occasionally there may be a change of +/- 1 percentage point. Table 1 shows the headline measure in each version of the data for 2016 to 2025.

Table 1: Change in key stage 2 headline measure 2016 to 2025

| % of pupils meeting the expected standard in reading, writing and mathematics | 2016 | 2017 | 2018 | 2019 | 2022 | 2023 | 2024 | 2025 |
|---|---|---|---|---|---|---|---|---|
| Interim | 53% | 61% | 64% | 65% | 59% | 59% | 61% | 62% |
| Provisional | 53% | 61% | 64% | 65% | 59% | 59% | 61% | 62% |
| Revised | 53% | 61% | 64% | 65% | 59% | 60% | 61% | 62% |
| Final | 53% | 61% | 64% | 65% | 59% | 60% | 61% | - |

Changes in the LA figures can be slightly larger, largely due to the removal of pupils recently arrived from overseas with English as an additional language. In 2025, 117 of the 151 LAs (excluding City of London and Isles of Scilly) had a change in the percentage of pupils reaching the expected standard in reading, writing and mathematics between provisional and revised data. The majority of these changes were between 1 and 2 percentage points, although 1 LA had a change of 6 percentage points.
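The national revisions in Table 1 can be checked with a few lines of code. The sketch below uses the provisional and revised rows of Table 1; the variable names are illustrative.

```python
# Headline measure (% meeting the expected standard in reading, writing
# and mathematics), taken from the provisional and revised rows of Table 1.
years = [2016, 2017, 2018, 2019, 2022, 2023, 2024, 2025]
provisional = [53, 61, 64, 65, 59, 59, 61, 62]
revised = [53, 61, 64, 65, 59, 60, 61, 62]

# Change in percentage points between provisional and revised data.
changes = {
    year: rev - prov
    for year, prov, rev in zip(years, provisional, revised)
}

# Keep only the years in which the national figure was revised.
revised_years = {year: change for year, change in changes.items() if change != 0}
print(revised_years)  # {2023: 1}
```

Only 2023 shows a revision at national level, a rise of 1 percentage point, which is consistent with the statement that national figures typically do not change.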

3. Coherence

Coherence is the degree to which the statistical processes, by which two or more outputs are generated, use the same concepts and harmonised methods.

We use the same methodology to produce the data within our publications and the performance tables. We also use a dataset produced at the same time for the performance tables and the revised publication. As a result, the national and LA figures included in both the revised publication and the performance tables will match.

4. Comparability

Comparability is the degree to which data can be compared over time, region or other domain.

4.1 Over time

There have been a number of changes to primary school assessment over time which can make comparisons over time difficult. These changes are listed in annex C.

- For the test subjects (reading, maths and GPS), the expected standard in 2025 can be compared to 2024, 2023, 2022 and 2016-2019 data.

- For writing TA, the expected standard in 2025 can be compared to 2024, 2023, 2022 and 2018 and 2019 data only, due to new frameworks being introduced in 2017/18.

- For science TA, the expected standard in 2025 can be compared to 2024, 2023, 2022 and 2019 data only, due to frameworks being modified in 2018/19.

For more information see the Teacher Assessment frameworks.

4.2 Differences between national, local authority, local authority district and parliamentary constituency figures

The figures published in the national key stage 2 tables include any results from independent schools but results from these schools are excluded from the local authority (LA) figures. There are also some differences in the pupils included in the national and school level figures. Pupils with ‘pending maladministration’ (S), ‘missing’ (M) and ‘pupil took the test/was assessed in a previous year’ (P [1]) are normally included in the LA, LAD and parliamentary constituency level figures but are not included in the national figures. Similarly, where schools ask for overseas pupils to be discounted, these pupils will be removed from the LA figures but remain included in the national figures so that these reflect the attainment of all pupils. A national figure calculated on the same basis as the LA figures is included in the LA tables for comparison purposes.

[1] Pupils with P will normally have the P replaced with their previous result if it can be found. If a previous result cannot be found, the pupil's result will be left as P and treated as missing.
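The effect of discounting overseas pupils on the two bases can be sketched as below. This is a simplified illustration covering only the discounting rule, not the treatment of S, M and P codes or of independent schools. The field names and the example pupils are invented for illustration.

```python
from typing import Dict, List


def pct_meeting_standard(pupils: List[Dict[str, bool]], include_discounted: bool) -> float:
    """Percentage of pupils meeting the expected standard.

    include_discounted=True mirrors the national basis, where pupils
    discounted at a school's request remain in the figures.
    include_discounted=False mirrors the LA basis, where they are removed.
    """
    cohort = [p for p in pupils if include_discounted or not p["discounted"]]
    if not cohort:
        raise ValueError("no pupils in cohort")
    return 100 * sum(p["met"] for p in cohort) / len(cohort)


# Invented example: five pupils, one of whom has been discounted.
example = [
    {"met": True, "discounted": False},
    {"met": True, "discounted": False},
    {"met": True, "discounted": False},
    {"met": False, "discounted": False},
    {"met": False, "discounted": True},
]
print(pct_meeting_standard(example, include_discounted=True))   # 60.0
print(pct_meeting_standard(example, include_discounted=False))  # 75.0
```

The same pupils give different percentages on the two bases, which is why a national figure calculated on the LA basis is provided for comparison.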

4.3 Across different types of school

Care needs to be taken when making comparisons across school types as schools can change type over time. For example, a simple comparison of the published figures for converter academies over time may be misleading because the number of converter academies has increased over this period so the same schools are not included each time. Any changes seen could be because the schools added into this category have different attainment to those which were already there, rather than that the results for these schools have improved or declined.

Even when we restrict our comparisons to the same group of schools over time (for example, academies that have been open for 3 years), we need to be aware that different types of schools will have had different starting points and this may affect their ability to improve. For example, sponsored academies generally start with lower attainment so have lots of potential to improve, whereas converter academies generally have higher levels of attainment so have much less room for improvement.

4.4 With other parts of the UK

The Welsh Government publishes attainment data for schools in Wales. As in England, the national curriculum is divided into key stages and pupils are assessed at the end of key stage 1, 2 and 3 at ages 7, 11, and 14 respectively. Statutory assessment in Wales is by teacher assessments for all key stages. Further information is available on the Welsh Government website.

The Scottish Government measures attainment nationally using the Scottish Survey of Literacy and Numeracy (SSLN), an annual sample survey of pupil attainment in primary and early secondary school. Further information is available on the Scottish Government website.

Information on educational attainment for post-primary schools in Northern Ireland is available from the Northern Ireland Statistics and Research Agency.

These assessments are not directly comparable with those for England.

4.5 International comparisons

Pupils in England also take part in international surveys such as the Trends in International Mathematics and Science Study (TIMSS) and the Progress in International Reading Literacy Study (PIRLS). TIMSS is a comparative international survey of mathematics and science achievement of 9-10 year olds and 13-14 year olds, carried out on pupils from a sample of schools. PIRLS is an international study of how well 9-10 year olds can apply knowledge and skills in reading.

Pupils in England also participate in the Programme for International Student Assessment (PISA), organised by the Organisation for Economic Co-operation and Development (OECD). This assessment aims to compare standards of achievement for 15-year-olds in reading, mathematics and science between participating countries. This study is based on pupils from a sample of schools.

5. Timeliness

Timeliness refers to the lapse of time between the period to which the data refer and the publication of the estimates.

Key stage 2 tests take place in the third week of May and schools are required to submit key stage 2 teacher assessments to STA by the end of June.

Interim key stage 2 national data are published in early July, the same day that assessment results are released to schools. This is less than two weeks after the deadline for submission of teacher assessments.

Provisional key stage 2 data are published in early September, 10 weeks after the deadline for submission of teacher assessments. The data contains information about all pupil characteristics including disadvantage and phonics prior attainment.

Revised key stage 2 data, including school level data, are published in mid-December. At this point, the data contains the outcomes of marking reviews and maladministration investigations.

During this period, the data are quality assured, matched with other data and processed to produce the statistical publication outputs.

6. Punctuality

Punctuality refers to the time lag between the actual and planned dates of publication.

The proposed month of publication is announced on gov.uk at least twelve months in advance and precise dates are announced in the same place at least four weeks prior to publication. In the unlikely event of a change to the pre-announced release schedule, the change and the reasons for it would be announced. In 2019 the Revised publication was moved back by a day, in line with ONS guidance, due to a general election.

Prior to 2019, the only occasion when any primary attainment publication had been delayed was in 2008. In 2008, there were problems with delivery of the national curriculum tests at key stage 2. Provisional key stage 2 data was published on schedule in August but publication of the revised data that was due in December 2008 was delayed until 1 April 2009.